Kafka

Event streaming platform

Real-time data processing

Scalable message broker

Fault-tolerant storage

Pub-sub & message queuing

High throughput, low latency

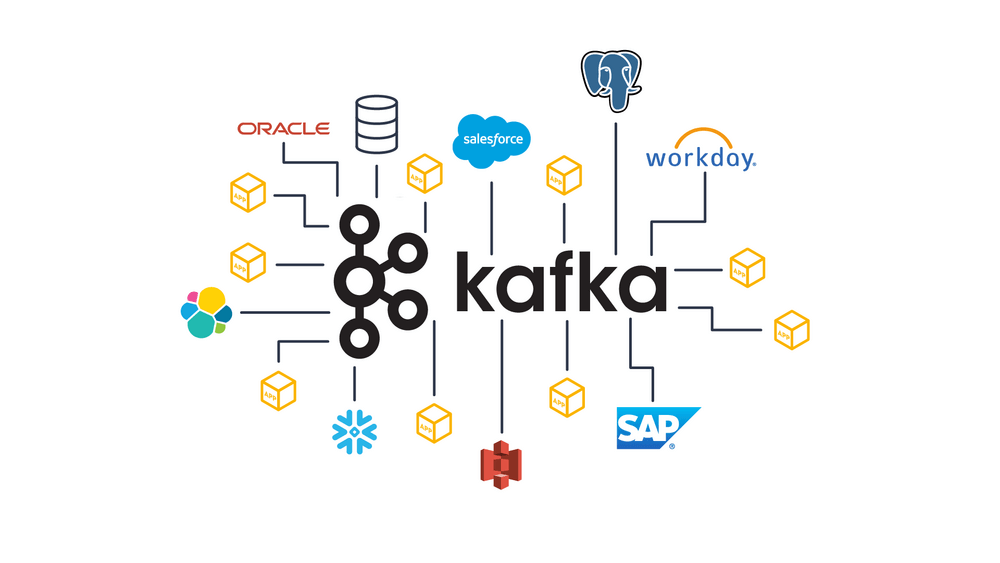

Apache Kafka is a leading distributed event-streaming platform designed to help organizations build scalable, real-time data pipelines and event-driven architectures. As a high-throughput and fault-tolerant messaging system, Kafka empowers enterprises to process, analyze, and act on data streams with speed and reliability.

⬤ Why Kafka?

Kafka enables seamless data flow across modern digital ecosystems. Its robust architecture supports continuous data ingestion from diverse sources and ensures durability through distributed, partitioned logs. This makes Kafka an ideal backbone for mission-critical applications that require real-time insights.
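To make the idea of a distributed, partitioned log more concrete, here is a minimal in-memory sketch. It is a toy model under stated assumptions, not Kafka's API: the `PartitionedLog` class, the `partition_for` helper, and the partition count are hypothetical names chosen for illustration, and CRC32 stands in for the murmur2 hash that Kafka's Java client uses for keyed records.

```python
import zlib
from collections import defaultdict
from typing import Tuple

NUM_PARTITIONS = 3  # hypothetical partition count for this illustration


def partition_for(key: str, num_partitions: int = NUM_PARTITIONS) -> int:
    """Map a record key to a partition using a stable hash.

    Assumption: CRC32 is used only because it ships with Python;
    Kafka's Java client hashes keys with murmur2 instead.
    """
    return zlib.crc32(key.encode("utf-8")) % num_partitions


class PartitionedLog:
    """Toy model of a topic: one append-only list per partition."""

    def __init__(self, num_partitions: int = NUM_PARTITIONS) -> None:
        self.num_partitions = num_partitions
        self.partitions = defaultdict(list)

    def append(self, key: str, value: str) -> Tuple[int, int]:
        """Append a record and return its (partition, offset)."""
        partition = partition_for(key, self.num_partitions)
        self.partitions[partition].append((key, value))
        return partition, len(self.partitions[partition]) - 1
```

Because the hash is stable, records that share a key always land in the same partition and keep their relative order there, while different keys spread the load across partitions.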

⬤ Key Capabilities

- High-Throughput Event Streaming

Efficiently handles millions of events per second, enabling real-time processing at enterprise scale.

- Scalable & Distributed Architecture

Built to grow with your business. Kafka’s partitioned, replicated clusters offer horizontal scalability and high availability.

- Reliable & Durable Messaging

Ensures data persistence with fault-tolerant storage, making it ideal for long-running and mission-critical workflows.

- Flexible Integration

Integrates seamlessly with modern data processing frameworks such as Apache Spark, Apache Flink, Elasticsearch, and cloud-native services.

- Real-Time Analytics & Processing

Powers time-sensitive applications such as fraud detection, IoT data pipelines, operational monitoring, and user behavior analytics.
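As a rough illustration of how replication supports high availability, the sketch below models a single partition whose copies live on several brokers. It is a simplified assumption-based model, not Kafka's implementation: the `ReplicatedPartition` class and the broker names are hypothetical, and real Kafka elects leaders through its controller using the set of in-sync replicas.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ReplicatedPartition:
    """Toy model of one partition replicated across brokers."""

    replicas: List[str]                       # brokers holding a copy (hypothetical names)
    leader: Optional[str] = None              # broker currently serving reads and writes
    in_sync: List[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Assumption: every replica starts fully caught up,
        # and the first replica in the list acts as leader.
        self.in_sync = list(self.replicas)
        self.leader = self.replicas[0]

    def fail_broker(self, broker: str) -> None:
        """Drop a failed broker and promote an in-sync replica if it was the leader."""
        if broker in self.in_sync:
            self.in_sync.remove(broker)
        if broker == self.leader:
            self.leader = self.in_sync[0] if self.in_sync else None


partition = ReplicatedPartition(["broker-1", "broker-2", "broker-3"])
partition.fail_broker("broker-1")  # the leader fails; a follower takes over
```

The partition stays available as long as at least one in-sync replica survives, which is why the number of replicas per partition is a key design decision.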

⬤ Business Use Cases

- Event-Driven Microservices

Enables loosely coupled, high-performance services to communicate via reliable event streams.

- Data Pipeline Modernization

Acts as a central hub for streaming ETL and real-time data synchronization across cloud and on-prem systems.

- IoT Streaming & Telemetry

Supports continuous ingestion and analysis of large-scale sensor and device data.

- Log Aggregation & Monitoring

Consolidates logs from multiple systems for centralized observability and operational intelligence.

⬤ How We Help

As a technology consulting partner, we help organizations design, implement, and optimize Kafka-based solutions tailored to their business goals.

Our services include:

- Architecture design & platform implementation

- Real-time data pipeline development

- Migration from legacy messaging systems

- Performance tuning & capacity planning

- Managed Kafka services and ongoing support

FAQs

- What is Apache Kafka used for?

Kafka is used for real-time data streaming, log processing, event-driven architectures, and messaging in large-scale applications.

- How does Kafka ensure fault tolerance?

Kafka replicates each partition across multiple brokers, so data remains durable and available even if a node fails.

- What is the difference between Kafka and traditional message queues?

Unlike traditional message queues, which typically remove a message once it has been consumed, Kafka persists data for a configurable time, allowing multiple consumers to read the same messages independently.

- Can Kafka handle large-scale data processing?

Yes, Kafka is designed for high-throughput and low-latency processing, making it suitable for big data and IoT applications.

- How does Kafka handle scalability?

Kafka scales horizontally by adding more brokers and partitions, allowing it to handle millions of messages per second efficiently.
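The difference from a traditional queue can be sketched in a few lines. The example below is a toy, single-partition model and not Kafka's client API: the `RetainedLog` class, the group names, and the event names are hypothetical, and time-based retention is left out for brevity. It shows records staying in the log after being read, with each consumer group tracking its own position.

```python
class RetainedLog:
    """Toy single-partition log: reading a record does not remove it."""

    def __init__(self):
        self.records = []
        self.offsets = {}  # consumer group name -> next offset to read

    def append(self, value):
        self.records.append(value)

    def poll(self, group, max_records=10):
        """Return the next batch for this group and advance only its offset."""
        start = self.offsets.get(group, 0)
        batch = self.records[start:start + max_records]
        self.offsets[group] = start + len(batch)
        return batch


log = RetainedLog()
for event in ["order-created", "order-paid", "order-shipped"]:
    log.append(event)

billing = log.poll("billing")      # reads all three events
analytics = log.poll("analytics")  # reads the same three events independently
```

Each group sees the full stream at its own pace, so adding a new consumer does not affect existing ones.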